On LLMs and The Internet

Preface

The concept of Artificial Intelligence (AI) has been in our literature for a long time. Much of modern sci-fi literature is built on some form of AI. For example, in Star Wars (1977), droids like C-3PO and R2-D2 display nearly human behaviors. But modern sci-fi is not the first occurrence. Mary Shelley’s Frankenstein (1818) touches on the creation of life itself, nearing the idea of AI. Frankenstein’s Monster[1] is as much an artificial intelligence as it is an artificially constructed being.

Not all literature that includes forms of AI discusses the ethics behind it. Star Wars definitely doesn’t. And Frankenstein isn’t about AI so much as about going against nature. In contrast, The Matrix (1999) depicts a dystopian hellscape where machines with Artificial General Intelligence[2] (AGI) imprison humans in a simulation.

Brief tangent:

I think (and hope) that AGI, if even feasible at all, will develop in the distant future, rather than sometime soon.

This is all to say that the idea of AI, and the possible use and implementation of said AI, is not new. Yet we ended up in (at least in my opinion) some form of hellscape. Obviously this hellscape in no way resembles the one in The Matrix; it is more of an information hellscape.

For the rest of this post, I will only be referring to Large Language Models[3] (LLMs) and the issues I have with them. Additionally, I will be setting aside the immediate issues associated with training and running such models, including but not limited to data collection, computational resource requirements, and potential biases in the model.

An LLM, in some of the simplest terms possible, generates text in response to a prompt.

Example:

Me:

count to 10 for me

ChatGPT:

Sure! Here you go:

1, 2, 3, 4, 5, 6, 7, 8, 9, 10.

All things considered, this is a very powerful and useful technology. I personally used ChatGPT all throughout my Calc III semester to do tasks like generate problems, grade problems, reformat notes, etc. And I still do so to generate Bash or Python scripts, to explain code to me, or to edit my writing. In fact, I will most certainly be using ChatGPT to edit this post.

Why is this bad?

There is nothing inherently wrong with LLMs as a technology. The issue is when the internet, which is just a network of information, is flooded with information that was not written by humans.

Meta, or Facebook, led the charge by introducing AI accounts[4] that mimic human-run accounts. I don’t think I need to dive too deep into why this is a terrible idea. Let’s say a person interacts with one of these AI accounts. They might have a conversation, or briefly see a made-up post, and believe that’s what some real person is doing. The purpose of social media should be to connect people, and the AI account has just done the opposite. The person in this thought experiment hasn’t interacted or connected with anyone. They’ve been fed AI-generated slop and, for all intents and purposes, lied to.

This is the issue with LLMs on the internet. SLOP.

Thought Experiment 1:

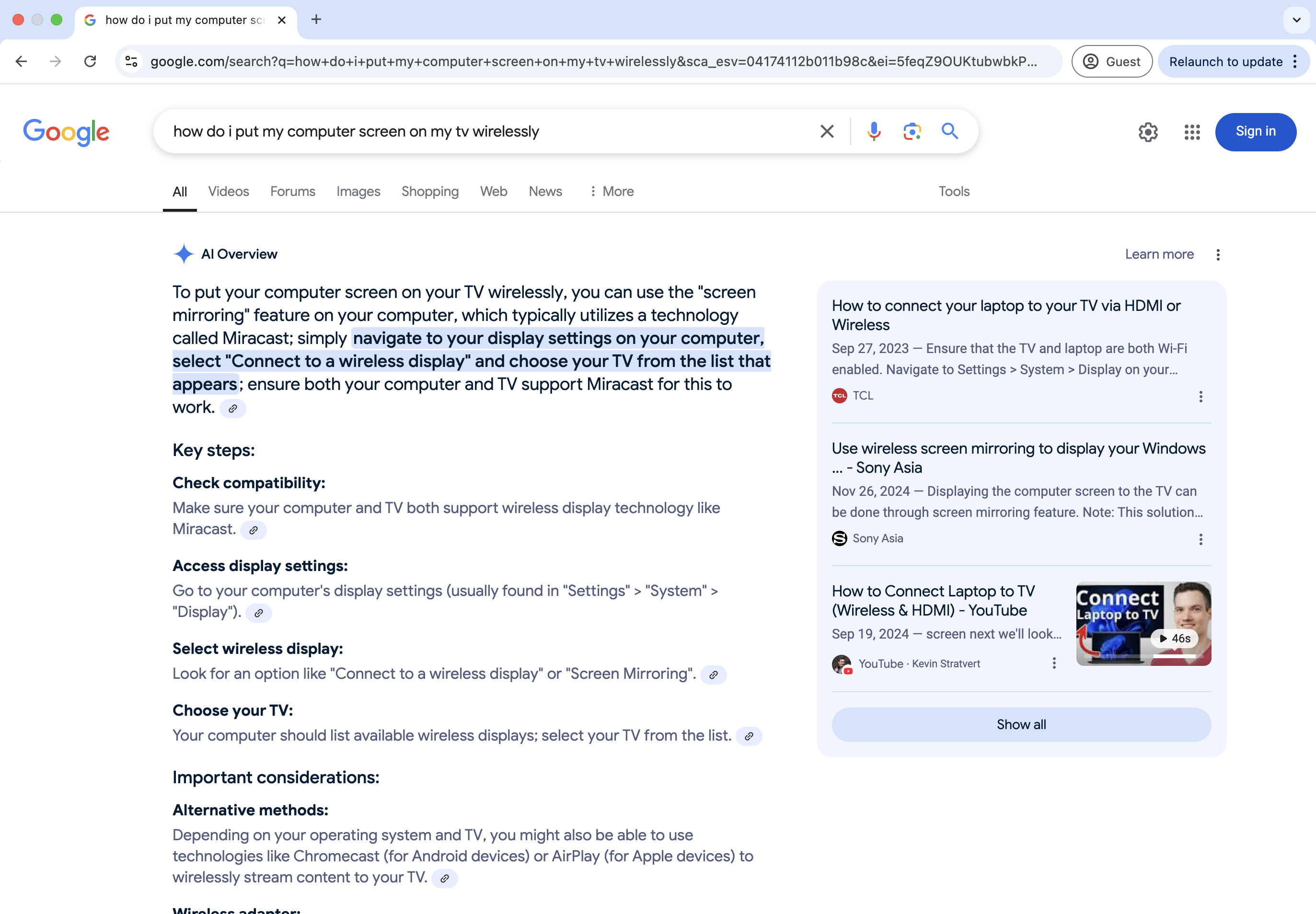

Let’s say our example human isn’t very good with computers. They want to cast their laptop to a TV to watch a movie. Our human goes to Google and searches “how do i put my computer screen on my tv wirelessly”. They are currently greeted with this screen[5].

Except that’s not how you mirror an Apple Mac to a TV[6]. The solution that takes up the majority of my screen when collapsed (and all of it when expanded) is incorrect because Google decided that an AI summary is what the internet needs.

It must be mentioned that the first search result is also not the correct solution. However, Google shows the AI summary as if it’s the correct solution – as if you don’t need to find a more accurate website. One that might mention “Apple” or “Mac”, because our human might not know to put that in the search. A computer is just a computer to them. But I do hope that they would be cognizant of the idea of multiple operating systems and choose the most accurate guide. This isn’t a rant about internet literacy, however, so I digress.

So in this case, and many like it, the human accessing this vast information network we call the internet has the wrong answer shoved down their throat. AI-generated slop is displayed on the majority of their screen.

Thought Experiment 2:

I would like to warn that this thought experiment is purely hypothetical. I do believe the way the internet is monetized leads to the construction of the kinds of sites mentioned below, but I have no solid proof. And I don’t really care to find any.

Now, our human has scrolled past the AI generated answer and stumbled upon a guide. “How to cast a computer to a TV”. This search has brought them to this hypothetical website:

Obviously this webpage is fake. I generated this one myself using ChatGPT.

Immediately there are some issues. You are plastered with advertisements. The site is now making money off displaying these advertisements to us humans. Generally not an insignificant amount either[7]. This has been an issue for a long time. Even searching something as simple as “degrees to radians calculator” will get you to numerous sites providing exactly that… at the cost of multiple advertisements.
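For a sense of how little such a calculator site actually provides, here is a minimal sketch of the whole conversion in Python. The function name `degrees_to_radians` is made up for illustration; Python’s standard library already offers the same thing as `math.radians`.

```python
import math


def degrees_to_radians(degrees: float) -> float:
    """Convert an angle from degrees to radians (radians = degrees * pi / 180)."""
    return degrees * math.pi / 180


# Hypothetical usage: a half turn, compared against the standard-library version.
half_turn = degrees_to_radians(180)
print(half_turn, math.radians(180))
```

That is the entire “service”, no advertisements required.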

I don’t want to see advertisements. I don’t think anyone does.

Not only do they intrude on the human’s vision and make it harder to find the information that said human is looking for, but they take forever to load. And they track the human’s passage through the online web of information.

If I went to a library and the librarian told someone which books I was looking at, for how long I was looking at said books, my history of looking at books in the library, and other nonsense like that for a small reward, I’d assume they were stalking me. Yet this is okay on the internet.

I would like to mention that the advertising method of monetization does make sense, and I wouldn’t be opposed to a single non-intrusive non-tracking advertisement on many sites. Again, I digress.

The main issue extends past those. I generated that webpage in less than ten seconds. Any competent developer could easily mass-produce webpages like these with the sole purpose of generating income. Bonus points if they make them just hard enough to follow, or take long enough to load, that Google thinks humans are spending a considerable amount of time on these sites.

This site lies to humans. It’s assumed that the page was written by another human; why wouldn’t it be? But it wasn’t. Again with the library analogy: this is similar to going to a library to find a book to read. Except the book was labeled non-fiction but presented false information. Except the library made money off your visit. Except there isn’t even a librarian, just a CEO absently watching their money rise.

And this is just a how-to guide. What happens when an LLM is given a summary of a recent event with a transcript for some quotes to pull from? Now there exists an AI-generated news article that might not even be a reasonable interpretation of events because no human needs to audit it. When the sole purpose of a site is to pump out articles, an LLM does a wonderful job.

A Solution?

Enough complaining. How do we prevent this?

There is no real way to govern the internet. There shouldn’t be. Those who control the flow of information control what the majority of people think. So controlling what is and isn’t prevalent on the internet cannot be the job of one person or institution, unlike what some people may think. We must support what is useful, and refuse to use what is poor.

And regardless, specific rules aren’t a good idea either way. Disregarding the difficulty of enforcing said rules, any written rule in effect would be prone to loopholes[8]. Something like “any AI content must be labeled as such” isn’t specific enough to close them.

Afterword

There are a few choices I made in writing this post that I would like to list below.

1. People on the internet are humans.

To call humans “users” discredits their individuality and shifts the end goal. One goal is to make money by providing users a service. The other more noble goal would be to offer our fellow humans some form of information that they might be able to use.

Do you see the difference? If not, you might be lost.

2. The internet is a network of information.

The only purpose of the internet is to allow transfer of information between people. Information doesn’t need to be text. As software and hardware develop, so too do the types of information we can send. Once upon a time people could only send small amounts of encoded data using the telephone network. Now we can basically send any amount of any format of data from anywhere, to anywhere. In fact, we’ve been sending video games over the internet for ages. But it is still some form of information.

The purpose of the internet is not to provide another income stream to those who already have so much. It is to allow people to transfer information. To learn, to teach, to connect, etc.

So the solution is simple: treat it that way.

In Star Wars they didn’t normally use droids as a way to make money. They used them to make their lives better in some way.

1. Frankenstein’s Monster: the antagonist of Mary Shelley’s Frankenstein. Not to be confused with Victor Frankenstein, the scientist who created the monster.

2. Artificial General Intelligence refers to a type of AI whose intelligence mirrors that of a human. See Wikipedia: Artificial General Intelligence.

3. Large language models, such as ChatGPT and others, use fancy algorithms to predict the next word or phrase in a sequence of text. See Wikipedia: Large Language Models.

4. At the time of writing this, these AI accounts have allegedly been removed. This doesn’t stop humans from doing the same and “botting” social media networks.

5. Fun fact: I disabled dark mode for this screenshot.

6. The actual guide to setting up AirPlay on a Mac can be found here.

7. Sorry, no source here… The footnote does make me look credible, however :).

8. Little bit of a tangent here. One purpose of a lawyer, to the best of my knowledge, is – assuming the defendant is guilty in some way – to interpret a phrase in such a way that proves the restriction does not apply. The goal isn’t to make everything complicated and go full circle, but to work on the problem itself.